Introduction

Hi, I'm Josh. I'm a programmer, hardware hacker, and parent. I put this together to hopefully give an accessible and reasonably well-rounded introduction to who I am and where my skills and interests lie.

Please use the left navigation (hamburger icon on the top left, if it's collapsed) to explore different things I've done, and feel free to reach out to me if you have any questions or want to chat.

Regards,

Josh

TLDR Inc

I was the first hire at TLDR Inc, a business intelligence company developing novel natural language processing techniques to identify language trends in the market and providing insights into how different brands were being represented in the media landscape. My relationship with the idea behind the company actually began during my time with my startup OokTech, but due to COVID I had to step away from the project for a time.

While at TLDR I...

- Brought two products to market over a year

- Architected and implemented the frontend and supporting backend services

- Worked with the product owner to continuously iterate on the base designs, leveraging rapid prototyping to prove out ideas and identify flaws quickly.

- Continuously worked to improve deployment strategy with the operations manager.

- Refined and documented the backend data API to permit more flexibility and better align its capabilities with business needs

- Used lessons learned from first release to vastly improve the second

- Built human-friendly tools for visualizing and parsing large datasets

- Designed and built visualizations that scaled to 100,000+ item datasets while still being performant and relevant to the user

- Engaged in empathetic design, using natural sequences of operations and manipulations to help the user tell their story

- Actively engaged in product design

- Conceptual goals, visual design, and user experience considerations

- Leveraged a balance of collaborative "brainy" design with building rapid "real" prototypes to identify the unknown edges in our understanding

- Developed timelines to align resources with business needs

- Coordinated with stakeholders to establish requirements

- Allocated developer resources to ensure unknowns were identified early

- Adapted to changing requirements/understanding as the products matured

- Worked directly with users

- Developed onboarding technical documentation

- Provided direct support to new customers

- Solicited feedback to align our business's understanding of the domain

- Remained flexible in the face of unknown developments

- Ensured that my designs would be adaptable as our understanding of the domain matured

- Provided alternative solutions to technical and logistical problems that arose during development and launch

My Role

Our product team was very small; I was one of two developers (the other being a founder), with support from an operations guru, a data analyst, a project manager, and the product designer. As a result, I had extensive reach, both in my responsibilities and in my influence on design and architectural decisions - my previous relationship with the founding IP, as well as our team's culture of knowledge sharing, played a large part in supporting this.

My official role was that of frontend developer, developing the data manipulation and visualization tools that served as the core interface to our data, as well as essentially every other customer-facing portion of our product. In addition to that role I also managed backend services, deployments (with the support of our operations manager), database configurations, and many other tasks. It was a great place to be able to leverage my experience across the stack and knowledge domains to keep things moving fast.

I had the support of a fantastic team, in particular a product owner/designer who provided fantastic starting materials to design against, as well as welcomed suggestions and collaboration. Collectively we founded a culture of mutual respect and honesty, and I am honored to have worked with them.

My Key Takeaways

The small team and wide breadth of responsibility that come with being a first hire made for a unique and rewarding experience, both personally and professionally. I greatly appreciated the effort and talent that we all shared toward a common goal, and how much we fostered a respectful and supportive culture. I would not have been nearly as successful, nor have learned as much as I did, without the support of my team. While there were too many lessons to list, these are some of the key points I took away from my time with TLDR.

Going from Zero to One

I've built many projects, and deployed several to customers, but not with the same level of granular involvement in each step of the process I experienced here. Being able to start from scratch, build a product, and then put it into customers' hands was a hugely rewarding and enlightening experience. Doing it twice, and having the second time go significantly faster and with far fewer hiccups, was a major validation that I have a better understanding of where the true difficulties lie and that I am capable of carrying these lessons forward.

Architecture + Longevity

As an initial hire, I had huge latitude in how I implemented the product. To temper that, the product likewise had a lot of latitude to evolve as we better understood our audience and use case. This placed me in an interesting position because I had no guarantee that any choice I made would stand the test of time. Over time, however, I learned that my choices (largely) did indeed hold and proved to be flexible enough to survive several major external disruptions. For those choices that wound up not being tenable, I found that they had at least bought me and the business time to better understand the shape of the problem - and that their successors were made more obvious as a result. I think the strategy of quickly iterating, even if the domain is not entirely known, is crucial to developing a more complete understanding. And be prepared to throw away the bits that don't work - but in their absence, the shape of a solution may appear.

General Dynamics/Plexsys

General Dynamics (and Plexsys Interface Products) are government contractors - in this case, contracting with the US Air Force. I joined a team that created software to support distributed simulations performed by the Air Force, and I rapidly became familiar with a variety of new networking protocols through a variety of projects.

While at GDIT I...

- Wrote implementations of protocols based on published standards

- Translated technical specifications into practical code

- Used these specifications to build support tooling

- Stood up a virtualization and CI/CD infrastructure on an airgapped LAN

- Established a staging area where we could deploy and test easily

- Standardized and automated builds, which had been a series of undocumented manual scripts

- Introduced containers to improve developer efficiency

- Due to airgapped nature, containers allowed much more efficient onboarding

- Demonstrated potential benefits of production deployments

- Created support tooling

- We didn't have many ways to effectively test some software out of production

- Created tools that allowed us to simulate situations and recreate bugs

- Debugged a lot of multithreaded C++

- Dug through core dumps on airgapped systems to identify heisenbugs

- Identified and rectified incorrect use of mutexes (deadlocks, etc)

- Used my tools to reliably reproduce errors

- Mentored teammates and adjacent peers

- Assisted junior developers with assigned tasks

- Gave teaching sessions on Git, programming styles, specific domains

- Wrote + performed standardized test protocols

- Learned from our team's Testing Engineer how to write effective formal test documentation

- Performed formal integration test procedures

- Fostered cross-team collaboration

- An independent team was tasked with performing Acceptance Testing of our releases, but communication gaps led to historic misalignment of testing expectations

- I worked with my team lead to revise the processes in place to align both teams' interests and promote open dialogue

- This led to a huge reduction in inter-team conflict and failed testing results

My Role

I served in a full stack capacity here, developing at a systems level up through to web frontend development. I also spearheaded operational improvements, such as introducing virtualization environments for improved testing as well as automated packaging pipelines. Over time I became a mentor to new hires, and developed more documentation and processes to support their onboarding.

The development team was relatively small, and going through a phase of growth. There was a healthy amount of institutional knowledge in the veteran members, but the team was in a period of evolution as we adopted new technologies and techniques. I began by absorbing as much of the institutional knowledge as I could, and over time I began establishing new processes to help disseminate that knowledge as new hires came on.

A significant part of the work consisted of systems programming, in particular networking tools that parsed, filtered, routed, or otherwise handled the simulation protocols. I ultimately took part in designing, implementing, testing, and supporting these tools - learning from the experts on my team along the way.

As we continued to grow our team, I had the opportunity to mentor junior developers and provide supporting infrastructure for them. This included one on one code reviews, writing documentation, establishing protocols and processes, and teaching sessions. I tried to take my onboarding experience and smooth out as many of the rough edges as possible for them, while also enabling them to take ownership of their learning. Having the opportunity to share my knowledge with them and hear their perspectives was a very rewarding experience.

My Key Takeaways

I am very grateful to have been a part of a team that was so willing to impart their knowledge to me, and to then be able to return that favor to the new members as the team continued to grow. Working for the government was a distinct experience, one marked by process and constraint. However, I found that within those constraints we were still able to find ways to make our process more efficient, improve our relationships with other teams, and expand our capacity to take on new projects. These are some key points I carried with me from the experience.

Respect for Process

There are many times when I would prefer to move unencumbered by formal procedure, perhaps even most of the time. And at small enough scale a very light layer of process may be appropriate - but I saw that as things scale up, process was the only glue that kept the system together. Even if kludgy, having defined roles and steps in place ensured that everyone at least knew what to expect. Having the opportunity to see what happens when process can be eliminated, and likewise seeing the consequences of removing it inappropriately, has recalibrated my mental gauge. Moving forward, I now have more tools at my disposal to leverage process when appropriate, and a better sense of when that might be.

Operations and Tooling

I was incredibly fortunate to have a team lead who was supportive of me exploring opportunities to improve developer ergonomics. I learned a ton from standing up a CI/CD and virtualization environment in an airgapped network, and seeing how much it helped our team save time and energy was incredibly rewarding. Likewise, developing internal tools that assisted with reproducing bugs and provided avenues for automated integration testing was a great learning experience. Despite the constraints of the environment, I learned that there were still many ways that we could work with those constraints to improve our experience and produce better software.

OokTech (Self Employed)

I was fortunate to have the opportunity to try my hand at a startup working with my brother. Our specific work varied over time, but our core interests always revolved around creating tools and experiences that center around the humans that use them. Whether it's exploring AR and robotics or making a tool to keep people from touching their face during COVID, I want to use technology to give people opportunities to learn and improve their lives.

OokTech's Fate and Lessons Learned

Unfortunately COVID spelled the definitive end of OokTech, as our business relationships were unable to survive the changes that came with lockdown. However, I don't lament the change as it opened many opportunities for me to find new ways to grow. I learned many things from my business, such as:

- Self motivation

- As one of two people, I had to work to keep the business alive. This has imbued my work with a very strong sense of ownership and responsibility ever since.

- Project Management

- I was responsible for planning, designing, and building every project.

- Learning how to work with external stakeholders and effectively communicate requirements has been a core skill in my work.

- I learned the importance of understanding what "knowns", "known unknowns", and "unknown unknowns" are when planning and executing a project.

- Literally every piece of "The Stack"

- I designed circuits, laid out PCBs, and wrote software to interact with peripherals at the register level.

- I learned to be comfortable in the middle of a thousand page microcontroller manual.

- I designed applications on desktop, mobile, and web.

- Managing Customer Relationships

- I was the company representative at major deployments, and traveled to ensure that their vision was being executed as planned.

QCTouch

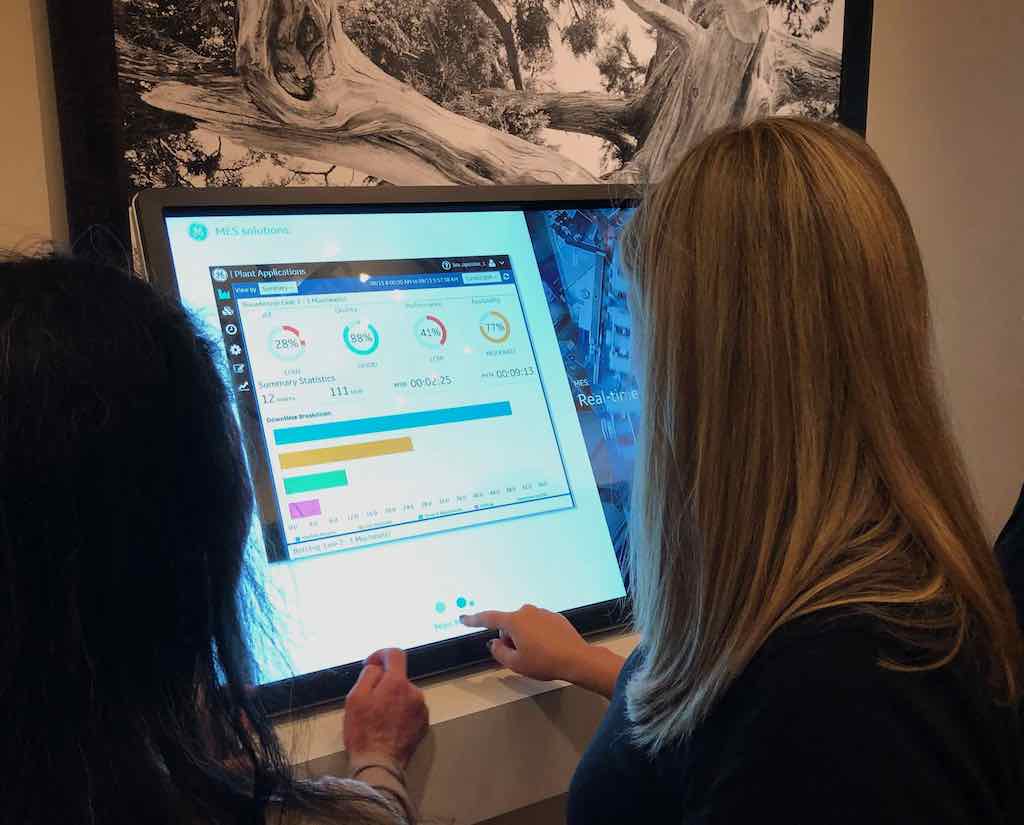

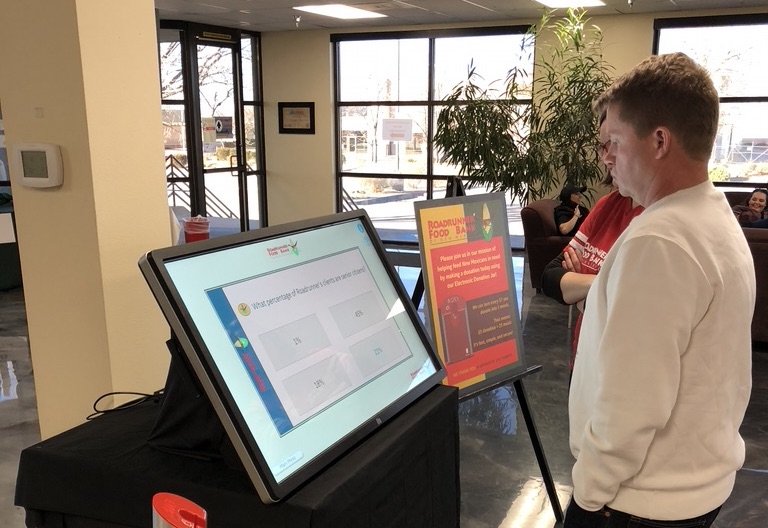

Deployment of QCTouch for a GE Digital Event

QCTouch was our custom solution for interactive touch experiences to let people explore products and organizations. Designed to be flexible for various cases and industries (see here for it in action for a non-profit), we worked with clients to tell their story and develop ways to make interesting and human-centric experiences.

Software

- Deployed as an Electron app

- Primarily to enable filesystem access, among other benefits

- Mix of React/Polymer/misc WebComponents to support client assets

- Lots of vanilla JS mixed in

- Cross platform

- Deployed on Mac Mini, small PCs running Windows, Linux

Lessons Learned

Reaching the user

Among the many lessons, one of the most significant was how to prime people for interactive experiences. So many of our digital interactions, especially with large screens, are passive, and it took some iterating to find techniques that indicated to the audience that they could engage with the screen. Even small indicators, like gentle pulses over interactive elements, had a large impact on user engagement. Over time we developed metrics that tracked where on the screen the users were touching for each view, which enabled us to learn what they thought was interactive and refine our experiences.
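As a rough illustration of the touch metrics described above, touches can be bucketed into a coarse grid per view and the busiest cell reported. This is a minimal sketch, not the code that shipped with QCTouch (which was an Electron app): the `TouchGrid` type, the normalized coordinates, and the grid size are all assumptions made for the example.

```rust
/// Counts touches per cell of a coarse grid laid over one view.
/// (Hypothetical type; one grid would be kept per view.)
pub struct TouchGrid {
    cols: usize,
    rows: usize,
    counts: Vec<u32>,
}

impl TouchGrid {
    pub fn new(cols: usize, rows: usize) -> Self {
        assert!(cols > 0 && rows > 0, "grid needs at least one cell");
        Self { cols, rows, counts: vec![0; cols * rows] }
    }

    /// Record a touch at normalized coordinates (0.0..=1.0 on each axis).
    pub fn record(&mut self, x: f64, y: f64) {
        let col = Self::bucket(x, self.cols);
        let row = Self::bucket(y, self.rows);
        self.counts[row * self.cols + col] += 1;
    }

    pub fn count(&self, col: usize, row: usize) -> u32 {
        self.counts[row * self.cols + col]
    }

    /// The most-touched cell as (col, row, count), or None if nothing was touched.
    pub fn hottest(&self) -> Option<(usize, usize, u32)> {
        let (index, &count) = self
            .counts
            .iter()
            .enumerate()
            .max_by_key(|&(_, &count)| count)?;
        if count == 0 {
            None
        } else {
            Some((index % self.cols, index / self.cols, count))
        }
    }

    /// Map a normalized coordinate to a cell index, clamping to the grid.
    fn bucket(value: f64, cells: usize) -> usize {
        let scaled = (value.clamp(0.0, 1.0) * cells as f64) as usize;
        scaled.min(cells - 1)
    }
}
```

Comparing the hottest cells against the positions of the truly interactive elements is one way to spot places where users expected something to respond and nothing did.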

Editorial Liberties

Another lesson related to adapting the source material, such as a client's product, to an interactive experience. In most cases the products themselves were not something we could ship embedded in the experience, so we had to mock the relevant parts. This was an interesting challenge, as we had to remain true to the source but also take into consideration how a user would interact with this proxied presentation. We quickly learned that it was not necessary to construct a true 1:1 representation, but rather focus on the pieces that spoke to our client's key points. This careful balance paid off, allowing us to remain flexible in the face of changing focus while also giving us more time to focus on the quality of the experience.

Robbie Roybut - Exploring AR Experiences

Robbie at Invalides, Paris

Robbie is a semi-autonomous robotic platform that has gone through 4 iterations (so far), with the mission of exploring new ways to create augmented reality experiences. Designed to provide a robust platform for navigating both interior and exterior environments, we used it to capture 360 degree video of various environments that we then edited with our custom AR annotating software to enrich the experience. Robbie was deployed at trade shows in Germany and the US, as well as many tours around Paris. We were hoping that we would be able to establish a relationship with the museums and other cultural sites in the city to begin building a catalog of educational AR experiences that people could enjoy from home.

Hardware: What makes the robot tick

Robbie is composed of several systems, interacting in tandem to provide a smooth navigation and recording experience. We designed Robbie from the ground up, and learned more from each iteration to improve the design.

- Raspberry Pi

- Primary brains

- Bluetooth connections for control

- Uses Bluetooth GATT to provide services/characteristics for control

- Communicates with other hardware via USB Serial

- MPU9250

- 9 axis IMU, contributes to navigation

- Rotary encoders (motors)

- Record how much each wheel moves, contributes to navigation

- Ultrasonic collision prevention

- Detects when surfaces are approaching to prevent crashes

- Motor driver

- Provides safe interface between low voltage control system and high voltage motor system

- Teensy

- 32 bit ARM microcontroller

- Interfaces with MPU9250 and other sensors

- Performs sensor fusion to give Robbie a sense of direction and orientation

- Automatically course corrects to compensate for slippage, slopes, etc.

- Provides dynamic power to wheels to overcome obstacles such as curbs, etc.
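The sensor fusion and course correction listed above can be sketched as a small heading estimator. This is only an illustration under stated assumptions: the source does not say which fusion algorithm ran on the Teensy (and that firmware was C++), so a simple complementary filter is swapped in here, and `HeadingFilter`, `alpha`, `steering_correction`, and the gain are hypothetical names and values.

```rust
/// Complementary filter: trusts the gyro over short intervals and an
/// absolute reference (e.g. a magnetometer heading) over long ones.
pub struct HeadingFilter {
    heading_deg: f32,
    /// Weight given to the integrated gyro estimate, 0.0..=1.0.
    alpha: f32,
}

impl HeadingFilter {
    pub fn new(initial_heading_deg: f32, alpha: f32) -> Self {
        Self { heading_deg: wrap_deg(initial_heading_deg), alpha }
    }

    /// `gyro_rate_dps` is the yaw rate in degrees/second and
    /// `reference_deg` is an absolute heading measurement.
    pub fn update(&mut self, gyro_rate_dps: f32, reference_deg: f32, dt_s: f32) -> f32 {
        let predicted = self.heading_deg + gyro_rate_dps * dt_s;
        // Blend along the shortest angular path so 179 -> -179 behaves.
        let error = wrap_deg(reference_deg - predicted);
        self.heading_deg = wrap_deg(predicted + (1.0 - self.alpha) * error);
        self.heading_deg
    }
}

/// Wrap an angle in degrees into the range [-180, 180).
pub fn wrap_deg(angle: f32) -> f32 {
    (angle + 180.0).rem_euclid(360.0) - 180.0
}

/// Proportional steering: returns (left, right) power adjustments that
/// turn the robot back toward the target heading. The sign convention
/// (positive error speeds up the left wheel) is an assumption.
pub fn steering_correction(target_deg: f32, heading_deg: f32, gain: f32) -> (f32, f32) {
    let error = wrap_deg(target_deg - heading_deg);
    (gain * error, -gain * error)
}
```

A higher `alpha` leans on the gyro and reacts quickly but drifts; a lower one follows the absolute reference and is noisier. Slippage on a slope shows up as a heading error, which the steering term then pushes back toward zero.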

Software: What makes the robot tock

- Raspberry Pi

- Node.js with Johnny-Five and Bleno

- Johnny-Five gave some convenient interfaces to the Teensy

- Bleno provided a Bluetooth API

- Teensy

- Firmware written in C++

- Used I2C for MPU9250 communication

- Hardware interrupts for rotary encoders

- iOS: RobBoss iPhone app

- Controlled Robbie via Bluetooth

- Desktop: RobBoss Electron app

- Used Noble to control via Bluetooth

Lessons Learned

Friction, or how I learned to test on carpet

I had an amusing (thankfully recoverable!) experience leading up to Robbie V3's first deployment at a major event. V3 had much larger wheels to help give it more stability over minor imperfections, as well as being able to tackle larger obstacles on outdoor excursions. I had been testing on hardwood floors and other relatively smooth surfaces, and the larger wheels were performing exactly as I'd hoped. And then I drove onto carpet. Larger wheels require much more torque to spin, and the difference in friction meant that the power curves I had configured for turns led to a stuttery mess. Thankfully I discovered my oversight with enough time to adjust the power curves and wheels (the event floor was carpeted!) and V3 was able to attend without any embarrassing turns.

Don't assume that your test conditions are representative of the real world, and be prepared to make adjustments as conditions change!

People Love Goofy

We tested different outfits for Robbie, because we didn't want to alarm people as it was driving around. We would send it out naked, circuits and wires visible to any who looked, or we would send it clad in "clean" paneling. Invariably, the more polished we dressed Robbie the less people wanted to be around it. But when we sent it out battery flapping in the wind people loved it - some took selfies with the derpy robot.

Don't be afraid to have personality in your products. People relate to and are comfortable with things that look silly much more than sterile design-by-committee facades.

Effective AR requires (very) high resolutions

When we began work on Robbie, the 360 cameras on the market were limited to 1080p per hemisphere. Shortly after, 4k/hemisphere cameras came onto the market. This proved to be a much more agreeable experience, but even with that increase the footage was grainy when projected into a 360 AR view. Despite having what we believed to be a compelling use case, we were unsatisfied with the quality of output and knew we would have to wait for future generations of hardware to get closer to realizing our complete vision. Now that the technology has had several years to mature, I'm interested to see if it is able to match our original hopes.

Sometimes ideas take time to bake and you need to wait on other factors to come into play before you can fully execute a vision.

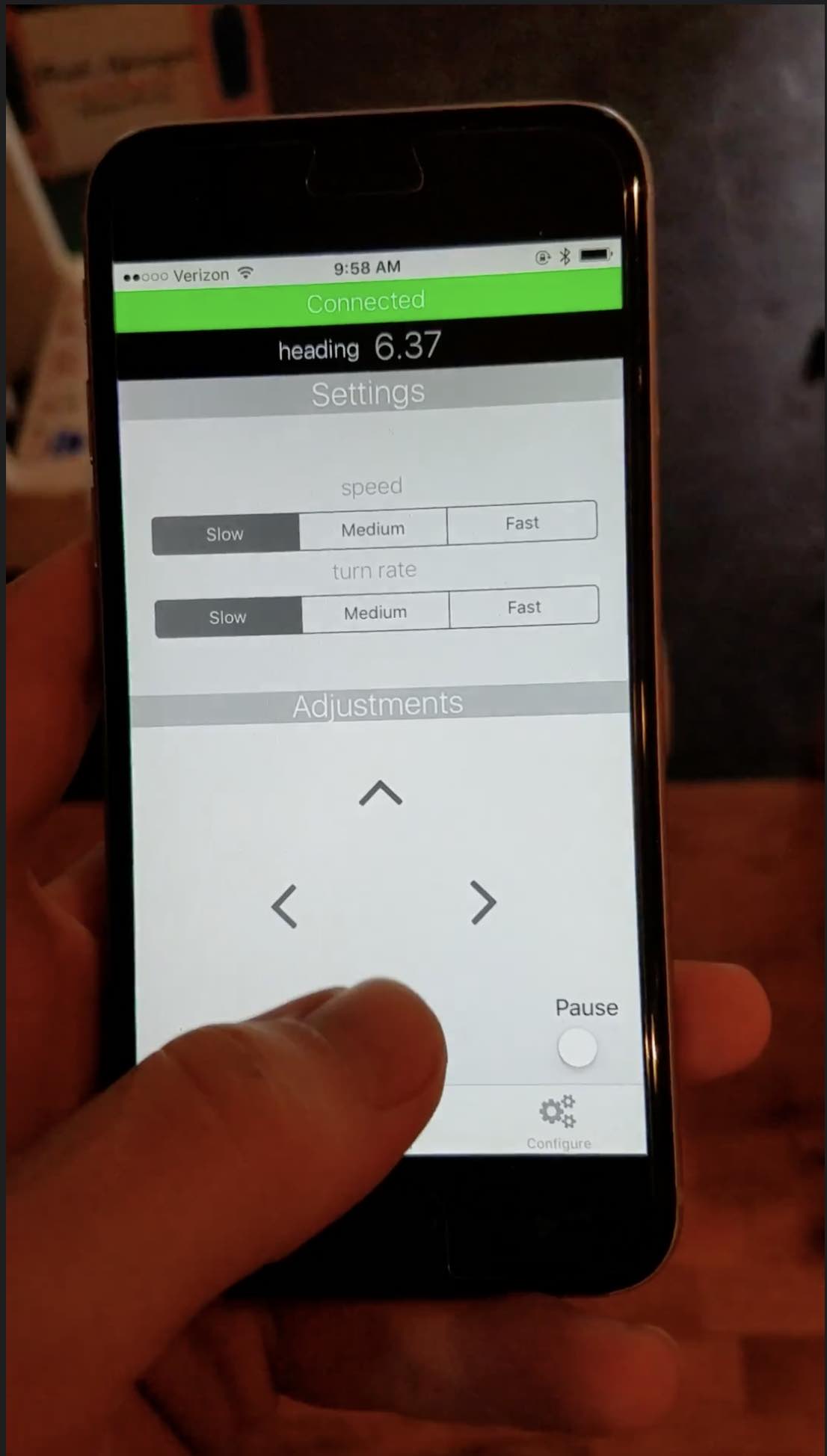

RobBoss - Robot Control

We needed a way to control our Robbie that was flexible and relatively nondescript. We explored and implemented traditional gamepad/joystick controls, but we decided an iOS Bluetooth solution would be worth a shot. It wound up being a very practical choice because we were able to easily extend our implementation to support additional configuration in software that would not have been possible with a physical controller.

Enter iOS

I designed a very simple iOS application that interfaced via Bluetooth GATT to Robbie. Robbie advertised GATT characteristics, which our iOS app wrote values to in order to control or configure it. It worked well, and iOS's Bluetooth APIs are thankfully quite simple to interface with. Despite Bluetooth's relatively limited range we were able to reliably drive Robbie further away than I found comfortable, which was a pleasant surprise.
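To make the "write values to a characteristic" flow concrete, here is a minimal sketch of how a control payload could be encoded by the controller and decoded on the robot. The wire format is entirely hypothetical (one opcode byte followed by signed wheel powers); the real RobBoss/Robbie protocol is not described here, and the receiving side actually ran Node.js with Bleno rather than Rust.

```rust
/// Commands a controller might write to a control characteristic.
#[derive(Debug, PartialEq)]
pub enum Command {
    Stop,
    /// Signed power for each wheel, -128..=127.
    Drive { left: i8, right: i8 },
}

#[derive(Debug, PartialEq)]
pub enum DecodeError {
    Empty,
    UnknownOpcode(u8),
    WrongLength { expected: usize, actual: usize },
}

// Hypothetical opcodes for this sketch.
const OP_STOP: u8 = 0x00;
const OP_DRIVE: u8 = 0x01;

/// Serialize a command into the bytes written to the characteristic.
pub fn encode(command: &Command) -> Vec<u8> {
    match command {
        Command::Stop => vec![OP_STOP],
        Command::Drive { left, right } => vec![OP_DRIVE, *left as u8, *right as u8],
    }
}

/// Parse the bytes received in a characteristic write.
pub fn decode(payload: &[u8]) -> Result<Command, DecodeError> {
    let (&opcode, args) = payload.split_first().ok_or(DecodeError::Empty)?;
    match opcode {
        OP_STOP => {
            expect_len(args, 0)?;
            Ok(Command::Stop)
        }
        OP_DRIVE => {
            expect_len(args, 2)?;
            Ok(Command::Drive { left: args[0] as i8, right: args[1] as i8 })
        }
        other => Err(DecodeError::UnknownOpcode(other)),
    }
}

fn expect_len(args: &[u8], expected: usize) -> Result<(), DecodeError> {
    if args.len() == expected {
        Ok(())
    } else {
        Err(DecodeError::WrongLength { expected, actual: args.len() })
    }
}
```

Keeping the payload this small matters for GATT writes, and rejecting malformed writes explicitly means a dropped byte cannot be misread as a drive command.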

RobBoss iOS

Enter Electron

For testing I didn't want to have to redeploy an iOS app with every change, so I also developed a desktop application using "noble", bleno's counterpart. Electron made this very easy, as its Node runtime let me run noble while serving my test interface without having to run separate processes. This tool was very helpful since I was able to demo changes to the Bluetooth interface without having to make time-consuming changes to the iOS code until ready.

Making AR Enjoyable

MARE was a really neat project. We had 360 video captures and we wanted to provide an augmented reality overlay to complement what was being displayed. MARE was a tool for both rendering and authoring these augmented layers.

The AR worked by rendering the video onto the inside of a sphere, projecting the flat image into the appropriate proportions. MARE then provided a persistent and relative content layer. The persistent layer maintained its position relative to the user, acting as a sort of heads up display. The relative layer was tied to elements in the video, and elements on this layer could go out of view as the user panned their head.
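The geometry behind the two layers can be sketched in a few functions. This is a simplified illustration, not MARE's implementation: it assumes the flat video frame is equirectangular, works in degrees of yaw and pitch, and uses hypothetical names (`direction_to_pixel`, `Annotation`, `View`, `offset_in_view`).

```rust
/// Wrap an angle in degrees into the range [-180, 180).
fn wrap_deg(angle: f64) -> f64 {
    (angle + 180.0).rem_euclid(360.0) - 180.0
}

/// Map a direction to pixel coordinates in an equirectangular frame.
/// Yaw 0 is the horizontal centre of the frame; positive pitch looks up.
pub fn direction_to_pixel(yaw_deg: f64, pitch_deg: f64, width: u32, height: u32) -> (f64, f64) {
    let u = (wrap_deg(yaw_deg) + 180.0) / 360.0;
    let v = (90.0 - pitch_deg.clamp(-90.0, 90.0)) / 180.0;
    (u * f64::from(width), v * f64::from(height))
}

/// Where the viewer is currently looking, and how wide their view is.
pub struct View {
    pub yaw_deg: f64,
    pub pitch_deg: f64,
    pub h_fov_deg: f64,
    pub v_fov_deg: f64,
}

pub enum Annotation {
    /// Fixed offset from the view centre, like a heads-up display.
    Persistent { offset_yaw_deg: f64, offset_pitch_deg: f64 },
    /// Anchored to a direction in the video, so it can leave the view.
    Relative { yaw_deg: f64, pitch_deg: f64 },
}

/// Angular offset of an annotation from the view centre, or None when
/// it falls outside the current field of view.
pub fn offset_in_view(annotation: &Annotation, view: &View) -> Option<(f64, f64)> {
    let (dx, dy) = match *annotation {
        Annotation::Persistent { offset_yaw_deg, offset_pitch_deg } => {
            (offset_yaw_deg, offset_pitch_deg)
        }
        Annotation::Relative { yaw_deg, pitch_deg } => {
            (wrap_deg(yaw_deg - view.yaw_deg), pitch_deg - view.pitch_deg)
        }
    };
    let visible = dx.abs() <= view.h_fov_deg / 2.0 && dy.abs() <= view.v_fov_deg / 2.0;
    if visible { Some((dx, dy)) } else { None }
}
```

The persistent layer ignores where the viewer is looking, while the relative layer subtracts the view direction, which is why relative elements scroll out of frame as the viewer turns.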

The AR author was able to walk through the video, placing elements in the environment to track key points of interest or provide additional context. We also explored computer vision algorithms for feature detection to enable automatic tracking of key elements in the video. With this relatively simple set of tools we were able to annotate videos, for instance following a landmark as Robbie drove by it, and give the viewer a deeper experience beyond just the visual media.

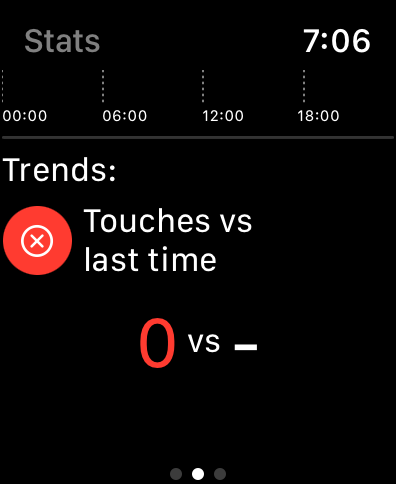

No Touchy

No Touchy was a product of COVID. With the disease spreading, we decided to create a simple application for the Apple Watch to help notify people when they reached for their face. We also included some metrics for the previous day, and some voice integration to speak to the user during calibration. Ultimately we were unable to publish the application to the app store due to time constraints, but the core functionality was essentially built out.

Software

- Built with Swift + SwiftUI

The software leveraged accelerometer data to attempt to identify when the user was motioning toward their face. This required marking the application as a "workout" tool, to expose the requisite app permissions. The app included a simple training method that established a rough bounding box of ranges where the user appeared to be touching their face. This method, while simple, proved to be largely effective. Given that we had to balance the computational complexity of the detection with the strict requirements from Apple to hit power consumption targets, we were ok with the potential for false negatives.
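The bounding-box idea can be sketched as follows. This is an illustrative reconstruction rather than the app's code (which was Swift): the `Sample` and `BoundingBox` types, the `margin` parameter, and the use of raw per-axis accelerometer values are assumptions made for the example.

```rust
/// One accelerometer reading (per-axis acceleration).
#[derive(Clone, Copy, Debug, PartialEq)]
pub struct Sample {
    pub x: f64,
    pub y: f64,
    pub z: f64,
}

/// Axis-aligned box around readings captured during calibration.
#[derive(Debug, PartialEq)]
pub struct BoundingBox {
    min: Sample,
    max: Sample,
}

impl BoundingBox {
    /// Build a box from calibration samples, widened by `margin` on every
    /// side. Returns None if no samples were captured.
    pub fn train(samples: &[Sample], margin: f64) -> Option<Self> {
        let first = *samples.first()?;
        let (mut min, mut max) = (first, first);
        for s in &samples[1..] {
            min = Sample { x: min.x.min(s.x), y: min.y.min(s.y), z: min.z.min(s.z) };
            max = Sample { x: max.x.max(s.x), y: max.y.max(s.y), z: max.z.max(s.z) };
        }
        Some(Self {
            min: Sample { x: min.x - margin, y: min.y - margin, z: min.z - margin },
            max: Sample { x: max.x + margin, y: max.y + margin, z: max.z + margin },
        })
    }

    /// True when a reading falls inside the trained range on every axis.
    pub fn contains(&self, s: Sample) -> bool {
        (self.min.x..=self.max.x).contains(&s.x)
            && (self.min.y..=self.max.y).contains(&s.y)
            && (self.min.z..=self.max.z).contains(&s.z)
    }
}
```

The check is six comparisons per reading, which fits the power budget concern above. A tight box (small `margin`) misses some touches, which is the false-negative trade-off already mentioned.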

Sample simulator screenshot

My Stack

Some notes on my technical setup, and things I play with.

Tools I Use All The Time

- Neovim

- Helps me focus on my task

- Marks make navigating through code fast

- I enjoy the modal style

- tmux

- Swap between my processes really easily without having to juggle terminal tabs/windows

- Being able to ssh in and continue from where I was is fantastic

- VSCode

- Helpful for me to look at/skim projects with a big picture view

- Git

- I version everything :-)

- I self-host a git server as a project store/offline backup

- Figma

- Figjams are one of my favorite mind-mapping/collaborative tools

- Helps with understanding big/new concepts and requirements

- Notebooks (the physical kind)

- I write/draw/braindump a lot on good old fashioned paper

- It helps with big/nonlinear problems since I can draw lines and write all over.

- Docker/Containers

- I never want to install MySQL on a personal machine again

- I use it to enable developing systems that would normally be distributed across machines on a single one instead

- Convenient deployment vector once the work is done

Programming Languages

Here is my breakdown of programming language experience, along with a blurb about each for context.

Languages I Prefer To Use

This list is really commentary on what I find valuable in my tools and what languages or features have served me well in an abstract sense. I realize that not every project will require or merit such consideration, and I am very comfortable working across languages to achieve the desired result.

Rust

If I'm going to be writing in a systems level language, I would take Rust any time. Many of the painful errors that I have experienced in C++ such as data races and nullability are caught at compile time. Furthermore, an actual package management system (cargo) makes managing projects much easier.

However, those are some of the basic arguments for Rust. Here is an example of why I want to use the language.

It's not always rainbows and butterflies, but I believe that the expressiveness and guarantees that Rust provides outweigh its cons.

Anything with explicit error handling

Non-exceptional error handling removes a massive set of logical errors due to oversight or implementation errors. Exceptions are part of an interface, but we often do not have the tools required to adequately guarantee that that interface is correctly used. Explicit error handling through the type system gives a much clearer and stronger contract, which in my experience leads to far fewer errors.
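A small Rust sketch of what that contract looks like in practice. The `ConfigError` type and `parse_port` function are hypothetical, invented only for this illustration: every failure mode is listed in the return type, and the caller has to acknowledge the `Result` before getting at the value.

```rust
use std::fmt;

/// Every way this operation can fail is part of its signature.
#[derive(Debug, PartialEq)]
pub enum ConfigError {
    MissingKey(&'static str),
    InvalidPort(String),
}

impl fmt::Display for ConfigError {
    fn fmt(&self, f: &mut fmt::Formatter<'_>) -> fmt::Result {
        match self {
            ConfigError::MissingKey(key) => write!(f, "missing required key `{key}`"),
            ConfigError::InvalidPort(raw) => write!(f, "`{raw}` is not a valid port"),
        }
    }
}

impl std::error::Error for ConfigError {}

/// Parse an optional raw setting into a port number.
pub fn parse_port(raw: Option<&str>) -> Result<u16, ConfigError> {
    // `?` returns early with the error instead of unwinding the stack
    let text = raw.ok_or(ConfigError::MissingKey("port"))?;
    text.trim()
        .parse::<u16>()
        .map_err(|_| ConfigError::InvalidPort(text.to_owned()))
}
```

A caller that matches on `ConfigError` gets a compiler error if a new variant is added and not handled, which is the kind of guarantee an exception-based interface usually cannot give.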

Anything with strong typing

Unless the project is truly small or a one-off script, I prefer to have explicit types - no duck typing! There are certainly advantages to dynamic languages when prototyping certain types of applications, such as not having to define a JSON schema up front. However in my experience types serve to not only document the interface and related data, but also hugely reduce mental overhead thanks to support from tools like LSPs.

Languages I Have Built Complete Projects In

C++

I am very comfortable with C++, having written a fair amount in both embedded and desktop environments. I enjoy the ability to be close to the hardware and develop highly efficient programs, despite the challenges that come with explicit memory management. The inclusion of smart pointers, as well as support for other high level language constructs, makes it a much safer and more compelling target. The primary reason I don't include it in my "preferred" list is because the tooling and related project management overhead are not as uniform or streamlined as other environments.

JavaScript/TypeScript

I have written extensively in these languages, building many tools and products in both browser (React, Solid, jQuery, vanilla) and server (Node.js) contexts. JavaScript is a very convenient language for rapidly putting something together, but it suffers the same flaws as any dynamically typed language when projects begin to scale and interfaces become more complex. TypeScript, while an improvement over JavaScript when it comes to refactoring and LSP integrations, falls very short thanks to its duck typing and limited support for true interface definitions.

Python

I've used Python for NLP work as well as server-side web applications. It's not my preferred style (I dislike the choice to make whitespace significant) and sometimes the lack of explicit typing makes following data transformations harder than it should be. However, I've used it to great success both in production and research contexts and its scientific packages are very powerful and expansive.

Swift

I've used Swift to produce iOS and watchOS applications, as well as implementing some audio processing CLI tools. It has many language features that I enjoy using, such as pattern matching and optionals. It's developed a fair amount since I used it last, so I may have some catching up to do to get up to speed on the latest features.

Stuff I'm Exploring

Go

- Interested in using it to replace Node.js for lighter server/middleware tasks

- Static typing + ease of concurrent/parallel execution

- Benefits of compilation

- Much higher performance ceiling

- Ships statically linked binary, so no additional runtime requirements

OCaml

- I want to learn more about purer functional style, without diving to Haskell

- Its type system is as rich as, or richer than, Rust's

- Has garbage collection to remove the mental overhead of managing lifetimes in Rust

- Looks like a solid complement when absolute performance isn't required

Applied Machine Learning

- Speech to text

- Tested Mozilla's DeepSpeech and OpenAI's Whisper

- Found OpenAI's offering to be significantly more aligned with my requirements, given fairly limited testing

- Exploring wrapping it into a tool to help me transcribe and manage my audio notes

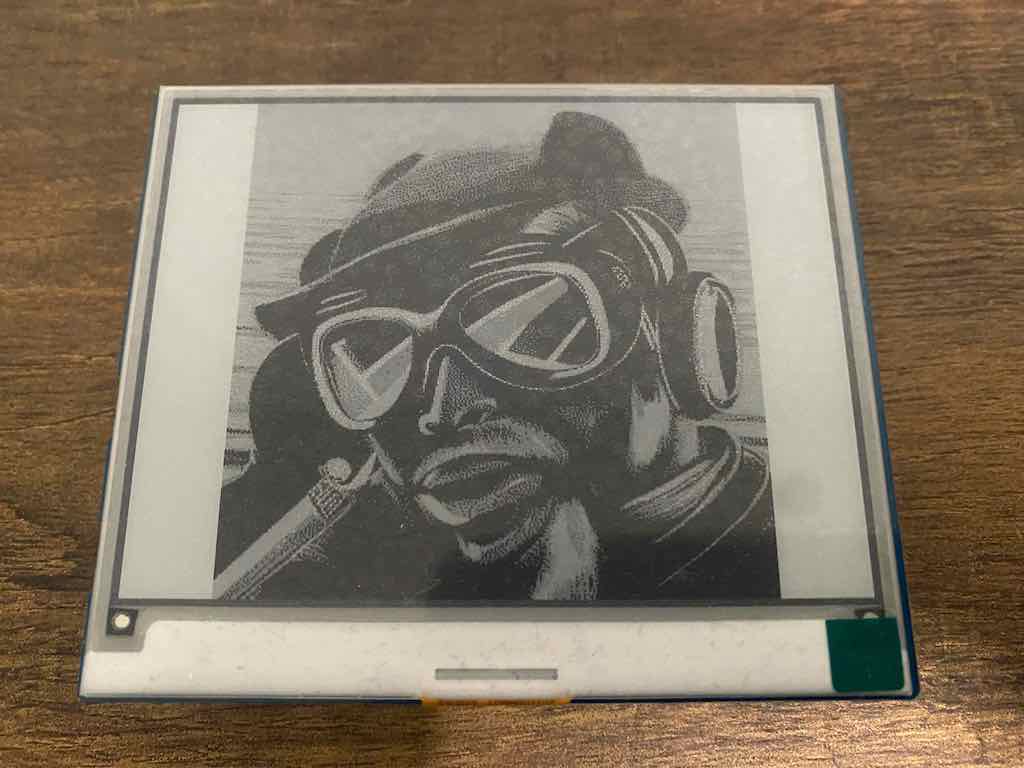

- Stable Diffusion

- I'm not a great artist, so having a tool that lets me approximate the image in my brain has been fantastic

- It makes for good test art with my eInk displays

- Large language models

- My current interest lies in leveraging an LLM as a frontend against a backing knowledge base

- E.g. documentation explorer, Wikipedia assistant

- Vector databases

- Exploring uses for vector encodings for efficient information retrieval

Why I Like Rust (... or any sufficiently expressive type system)

The fundamental flow of systems is to prepare some state and execute on that state when ready. Bugs arise when execution occurs with invalid state. We see this when making requests to servers with invalid parameters, when attempting to interface with hardware using incorrect configurations, and in many other cases. Often, the solution to such problems is exceptional code flow at runtime. You have to attempt a process, and then catch any errors that arise. There are other patterns that help mitigate this issue, but many put the onus on the programmer to keep track of this meta-state.

What if, instead, we had protections and guarantees within the type system to enforce proper handling of state? That way, the user couldn't accidentally call a function at an inappropriate time, or act on data that wasn't ready. The following code snippet demonstrates the use of "zero-sized types" to ergonomically prevent accidental use of certain interfaces until they are valid to use.

```rust
// Demonstration of using zero-sized types to give static (compile time)
// guardrails to prevent misuse of an interface
use std::{error::Error, marker::PhantomData};

// Zero-sized types to distinguish between operating states
pub struct APIRequestUnverified {}
pub struct APIRequestVerified {}

// Holds configuration for our request
pub struct APIRequest<State = APIRequestUnverified> {
    // Cool zero-sized type - takes no space at runtime
    state: PhantomData<State>,
    // API requirement: must have at least one of these set
    // before any API call is valid
    part1: Option<String>,
    part2: Option<String>,
}

// A bare `APIRequest` uses the default state parameter (Unverified),
// so every new request starts out unverified
impl APIRequest {
    pub fn new() -> Self {
        Self {
            state: PhantomData,
            part1: None,
            part2: None,
        }
    }
}

// This implementation applies only to APIRequests with Unverified status
// This provides a builder pattern to configure the request
impl APIRequest<APIRequestUnverified> {
    pub fn part1(mut self, part1: Option<String>) -> Self {
        self.part1 = part1;
        self
    }

    // Same builder setter for part2
    pub fn part2(mut self, part2: Option<String>) -> Self {
        self.part2 = part2;
        self
    }

    // Note the return type
    pub fn build(self) -> Result<APIRequest<APIRequestVerified>, Box<dyn Error>> {
        // Enforce our requirement that part1 or part2 is set
        if self.part1.is_none() && self.part2.is_none() {
            return Err("at least one of part1 or part2 must be set".into());
        }
        // Valid: return an APIRequest with a PhantomData type of Verified
        Ok(APIRequest::<APIRequestVerified> {
            state: PhantomData,
            // Populated with builder values
            part1: self.part1,
            part2: self.part2,
        })
    }
}

// This implementation only applies to APIRequests that are Verified
impl APIRequest<APIRequestVerified> {
    // Do something now that we have valid API parameters
    pub fn make_request(&self) -> Result<(), Box<dyn Error>> {
        Ok(())
    }
}

fn main() -> Result<(), Box<dyn Error>> {
    println!("Hello, person actually compiling this demo!");
    let request = APIRequest::new();

    // Uncomment this to generate an LSP/compiler error!
    // This function is not available for request yet!
    //request.make_request();

    match request.part1(Some("Hi".to_owned())).build() {
        Ok(req) => req.make_request(), // Now that it's verified, it is!
        Err(e) => return Err(e),
    }
}
```

This code compiles as written.

This idea extends far beyond simple API requests. In embedded development, we can use the same patterns to guarantee, at compile time, that we aren't attempting to use the same hardware resource two different ways at the same time. This level of expression, and the mental offloading it provides me when I code, are why I enjoy writing Rust.
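As a minimal sketch of the embedded case, the same zero-sized marker trick can make a pin's mode part of its type. The `GpioPin`, `Input`, and `Output` names below are hypothetical and not taken from any real HAL crate; the `level` field stands in for real hardware state.

```rust
use std::marker::PhantomData;

// Hypothetical marker types for a pin's mode (illustrative only).
pub struct Input;
pub struct Output;

// A pin whose mode is tracked in the type system at zero runtime cost.
pub struct GpioPin<Mode> {
    number: u8,
    level: bool, // stand-in for the real hardware register
    mode: PhantomData<Mode>,
}

impl GpioPin<Input> {
    // In this sketch, every pin starts out as an input.
    pub fn new(number: u8) -> Self {
        GpioPin { number, level: false, mode: PhantomData }
    }

    // Reading is only available on inputs.
    pub fn is_high(&self) -> bool {
        self.level
    }

    // Consumes the input handle, so the old handle can't be used afterwards.
    pub fn into_output(self) -> GpioPin<Output> {
        GpioPin { number: self.number, level: self.level, mode: PhantomData }
    }
}

impl GpioPin<Output> {
    // Driving the pin is only available on outputs.
    pub fn set_high(&mut self) {
        self.level = true;
    }

    pub fn set_low(&mut self) {
        self.level = false;
    }

    pub fn is_set_high(&self) -> bool {
        self.level
    }

    pub fn number(&self) -> u8 {
        self.number
    }

    // Hand the pin back as an input, again consuming the old handle.
    pub fn into_input(self) -> GpioPin<Input> {
        GpioPin { number: self.number, level: self.level, mode: PhantomData }
    }
}
```

Calling `set_high` on a `GpioPin<Input>`, or reusing a pin after `into_output`, is rejected by the compiler rather than discovered at runtime.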

School

I earned a Bachelor of Science degree in Biochemistry. I've always been interested in the life sciences, and at one point considered pursuing medical school. However, I had an opportunity to leverage my interests in technology and I decided that was where I wanted to contribute instead.

My education in science, and biochemical systems, gave me an opportunity to have a different perspective on my work. Our world is composed of microscopic mechanical systems that are simultaneously incredibly simple and amazingly complex. One of my biggest lessons in science is that despite all of the things we have discovered, there is so much more that we don't know. I choose to accept this ignorance and sense of wonder in my life and work, and approach problems and systems with an understanding that there are many possibilities that I have no knowledge of - and that I should keep my mind open to alternative paths. This approach has served me well, and allows me to embrace a life of learning and exploration.

Embrace the limits of your perception, and seek out alternative lenses to view the world.

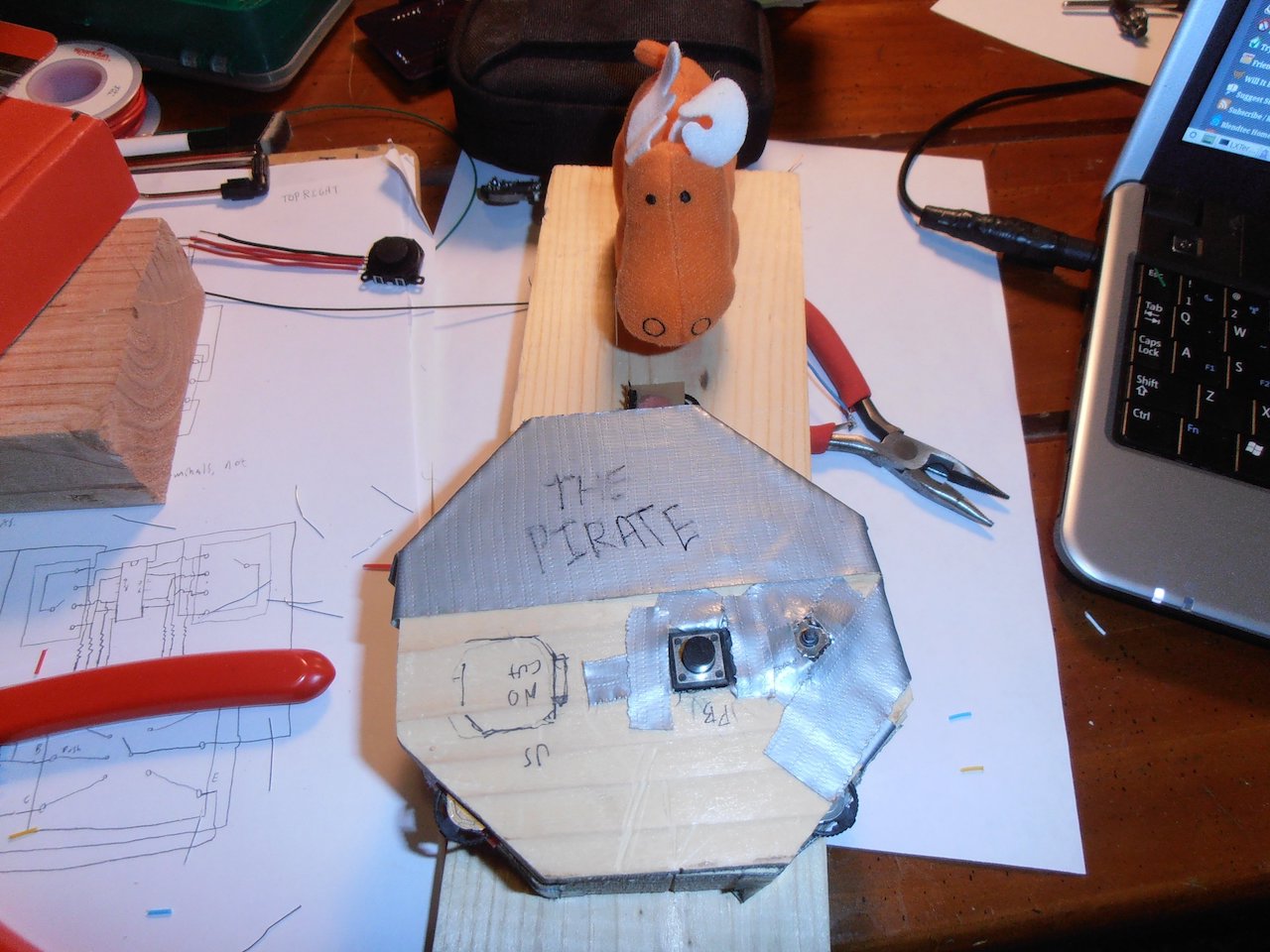

The Pirate

The Pirate was one of my first completed hardware projects. It was an (admittedly bad) chorded keyboard - that is, a keyboard where combinations of keys are used to write letters. The point was that with a few rocker switches, a joystick, and a button, you could control your computer pretty effectively with something that fit into your hand.

The Hardware

- Arduino Leonardo

- Used because it had native USB

- Rocker switches (3 inputs each)

- Flat joystick

- 5-way switch

- Push button

- Maybe a SPI I/O expander... I don't recall

- Duct tape - how else to keep it together?

The Software

The software was written in C++ using the Arduino IDE. The Leonardo was configured to act as a mouse and keyboard, with the joystick and push button acting as the mouse inputs. Aside from I/O mapping, the core of the code was a mapping of button combinations to keyboard key presses. The chord combinations were assigned based on probability of use - so the most common letters had the least complicated chords. There was also a 5-way switch, which acted as a mode selector - it could be "shift", "control", and so on, or it might change to a different chord set.
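The frequency-based assignment can be sketched roughly as follows. This is an illustration in Rust rather than the original Arduino C++, and it assumes a chord is a bitmask of pressed inputs and that "least complicated" means the fewest inputs pressed; `assign_chords` is a hypothetical name.

```rust
use std::collections::HashMap;

// Build a chord -> letter table.
// A chord is a bitmask of pressed inputs (bit 0 = first input, and so on).
// `letters_by_frequency` must be ordered from most to least common.
pub fn assign_chords(letters_by_frequency: &[char], num_inputs: u32) -> HashMap<u8, char> {
    assert!(num_inputs <= 8, "a u8 bitmask holds at most 8 inputs");

    // Every non-empty combination of inputs is a candidate chord.
    let mut chords: Vec<u8> = (1..(1u16 << num_inputs)).map(|c| c as u8).collect();

    // Fewer pressed inputs means a simpler chord; ties break on numeric value.
    chords.sort_by_key(|c| (c.count_ones(), *c));

    // Pair the simplest chords with the most common letters.
    chords
        .into_iter()
        .zip(letters_by_frequency.iter().copied())
        .collect()
}
```

With three inputs and the letters `e, t, a, o, i, n, s`, the three single-input chords go to `e`, `t`, and `a`, and the all-inputs chord goes to `s`. A mode selector could pick between several such tables.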

Lessons Learned

Two primary issues arose, both around ergonomics. The obvious one is that hand-cut blocks of wood aren't necessarily the nicest thing to hold - at least not with the quality of work I put into it. The real issue, however, was that the switches were not mechanically suited for the task. The idea was sound, but they could get caught in the middle, so you couldn't go very fast. I think with a similar but more slidable switch design and a revised case (3D printers exist now...) it would be a fun project to revisit.

Annotated Video - A learning tool

Forgive the programmer art - this is a mock.

Annotated video was conceived as a way to help provide context to visual media. Videos are a fantastic learning resource, but they suffer from several drawbacks:

- They are not interactive

- They are difficult to update

- Finding a specific topic within a video is hard

The point of annotated video was to give creators and users a way to interact with visual media differently. The annotations on the right are interactive and can contain rich content. Each annotation is additionally pinned to a timestamp in the video, which gives the user a readable, scrubbable interface to the video.
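Since this project is only a mock, the following is a sketch of one way timestamp-pinned annotations could be modeled, not an existing implementation. The `Annotation` struct and both function names are hypothetical, and the list is assumed to be sorted by start time.

```rust
// Hypothetical annotation record: rich content pinned to a video timestamp.
#[derive(Debug, Clone, PartialEq)]
pub struct Annotation {
    pub start_secs: u32,
    pub title: String,
    pub body: String,
}

// Return the annotation to highlight for the current playhead position:
// the latest one that starts at or before `position_secs`.
// Assumes `annotations` is sorted by `start_secs`.
pub fn active_annotation(annotations: &[Annotation], position_secs: u32) -> Option<&Annotation> {
    // Index of the first annotation that starts after the playhead.
    let idx = annotations.partition_point(|a| a.start_secs <= position_secs);
    idx.checked_sub(1).map(|i| &annotations[i])
}

// Find the timestamp to seek to for a topic, matching titles case-insensitively.
pub fn seek_target(annotations: &[Annotation], query: &str) -> Option<u32> {
    let query = query.to_lowercase();
    annotations
        .iter()
        .find(|a| a.title.to_lowercase().contains(&query))
        .map(|a| a.start_secs)
}
```

The first function drives the "which note is current" highlight while the video plays; the second goes the other way, letting a click or search on an annotation scrub the video.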

In the original case, the idea was that I could teach a friend how to use formulas in Tableau. While a video might be sufficient, it's hard to come back to as a reference. Furthermore, any code that I display in the video is not copyable - and in the case of a bad streaming connection, possibly illegible. So instead of, or in addition to, showing it on screen, I can place the code in an annotation. That way, my friend can skip to the code in question, can see the relevant context, and hopefully be more successful in their learning.

Document Generation

Nonprofits often have to generate a large amount of thank-you mail. Sending a response to a donation increases the likelihood of the donor giving again, so being able to send an acknowledgement accurately and quickly is an important business consideration.

I worked at such an organization, and the process for generating those thank-yous was painful. It consisted of mail-merging in Microsoft Word, with some prep work in Excel to get things lined up. To complicate things, different donations had different thank-you messages. Gifts in kind had to reference the gift, scheduled donations had to be skipped, and there were many other nuanced and specific rules. It was all done by hand. A human had to take all of the donation data, sift through the pieces, organize them, export different files per template type, then go through the process of loading each into Word and printing them. At one point, that human was me.

Honey I Built a Tool Again

So I built a tool in C# that bypassed the whole thing. It takes in a CSV of donation data, straight from the CRM. This CSV contains all of the fields necessary to perform the logic required to determine what template a donation should associate with. I developed an XML schema (I know - but I promise it's friendly to non-techies) that allows users to define rules. These rules are sorted such that the most important template type triggers first, falling through to the default if none of the rules are met. It even supports defining aliases for mapping from the CSV field to the {Template} in the source document - so instead of {Billing State/Province} in your template, you can alias that field to just {State}.
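The rule fall-through and aliasing can be sketched like this. It is written in Rust for illustration rather than the tool's C#, and it does not reproduce the actual XML schema: the `Rule` fields, the simple equality test, and the column names in the usage note are all assumptions.

```rust
use std::collections::HashMap;

// One donation row: CSV column name -> value.
pub type Row = HashMap<String, String>;

// Hypothetical rule shape: if `field` equals `equals`, use `template`.
pub struct Rule {
    pub priority: u32, // lower numbers are checked first
    pub field: String,
    pub equals: String,
    pub template: String,
}

// Check rules in priority order; fall through to the default template.
pub fn pick_template<'a>(rules: &'a [Rule], default: &'a str, row: &Row) -> &'a str {
    let mut ordered: Vec<&Rule> = rules.iter().collect();
    ordered.sort_by_key(|r| r.priority);
    ordered
        .into_iter()
        .find(|r| row.get(&r.field).map(String::as_str) == Some(r.equals.as_str()))
        .map(|r| r.template.as_str())
        .unwrap_or(default)
}

// Replace each {Alias} placeholder with the value of the CSV field it maps to.
// `aliases` maps alias name -> CSV column name.
pub fn fill(template_text: &str, aliases: &HashMap<String, String>, row: &Row) -> String {
    let mut out = template_text.to_string();
    for (alias, field) in aliases {
        if let Some(value) = row.get(field) {
            out = out.replace(&format!("{{{}}}", alias), value);
        }
    }
    out
}
```

For example, with an alias from `State` to `Billing State/Province`, the text `Greetings from {State}!` fills in from the long CSV column name without the template author ever seeing it.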

The result? Given a CSV, in a few seconds you are presented with an output folder with both the envelope Word document as well as the thank-you document, sorted in the same order and ready to print-fold-and-ship.

The Software

- C#/.Net 5

- DocX (non-FOSS library for OpenXML manip.)

- Ultimately I'd probably either find a FOSS thing or write a library to do just enough for what I need

Lessons Learned

I got to work with C#, which was an interesting experience. It's definitely more verbose in some ways, but it has a really convenient parallelization mechanism that I was able to leverage essentially for free to speed up large batches.

The DocX dependency not being freely available was the biggest sticking point for this project. It's not too expensive to license from them, but I wasn't going to bother with selling this anyway. Despite being a huge speed improvement over the original process, I know that things could be faster and I suspect that more optimization could be done, possibly with a homegrown (and libre) OpenXML library.

All in all it was a good run. My contact with the organization left shortly after I finished the tool, so I didn't have the same foot in the door to introduce it. I might revisit it someday, but it's not the most interesting problem - there are a ton of template tools. However, it was really rewarding to be able to eliminate a huge amount of busy work that I was subjected to with a tool that I built.

QCTouch - RRFB Edition

Home screen for the Roadrunner Food Bank interactive experience

Deployed for the Roadrunner Food Bank Souper Bowl

Roadrunner Food Bank, New Mexico's largest food bank, hosts an annual event called the "Souper Bowl" to raise money and awareness. I had the idea to create an RRFB interactive experience for the event to give people a way to learn about the food bank while they waited in the lobby. I pitched it to my old boss who worked there and they said if I could do it in time they'd be happy to have it for the event. I had around a week - so I gathered information from their published resources, designed a story flow, created some interactive questionnaires, and put them together into a coherent experience. I was able to leverage our existing system, adding in new interactive modes and styling to make the experience fit the food bank. The night before the event I deployed the system, and the next day it gave attendees a new way to learn about the food bank's work.

Software

- Same stack as QCTouch

- Specific styling/copy/games unique for the food bank

Lessons Learned

It was awesome to be able to put something together so quickly. It was a great validation that the system we had implemented for QCTouch was flexible beyond our corporate use cases. It was also a good exercise in designing around a story, since I wanted to keep the focus on the food bank rather than on flashy tech. I know many people stopped to use it and take a look around, and I hope that they learned something interesting and that the food bank received more support as a result.

Home NAS

I like self-hosting things, but generally I had used VPS services to do so. However, I got a really good deal on some used hardware: ~$70 for 32GB of RAM and a quad-core i7, plus ~$120 for 16TB of NAS drives. Ok, really really good deal.

Show me what you got

I settled on TrueNAS Scale for my OS because I wanted ZFS for redundancy, as well as the flexibility of the Linux base system - I want to run containers without a headache. I configured my drive pool as RAIDZ2, meaning that any two of my four drives can fail without any data loss. That means I only have ~8TB of effective storage, but that is plenty for my needs. Speaking of...
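The ~8TB figure follows from simple arithmetic: RAIDZ2 spends two drives' worth of space on parity. A rough sketch, assuming four equal 4TB drives (16TB total) and ignoring ZFS metadata and padding overhead; `raidz_usable_tb` is a hypothetical helper name.

```rust
// Approximate usable capacity of a RAIDZ vdev built from equal-sized drives.
// `parity` is 1, 2, or 3 for RAIDZ1, RAIDZ2, or RAIDZ3.
// Ignores ZFS metadata and padding overhead, so real numbers come out lower.
pub fn raidz_usable_tb(drives: u32, drive_tb: f64, parity: u32) -> Option<f64> {
    if drives <= parity {
        return None; // not enough drives to hold any data
    }
    Some((drives - parity) as f64 * drive_tb)
}
```

Here `raidz_usable_tb(4, 4.0, 2)` works out to (4 - 2) x 4TB = 8TB.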

What does it do though

- Gitea Server

- Mirror all of my projects on GitLab and GitHub so I have a local backup

- Jellyfin

- Local media streaming. It's awesome to have a personal Netflix if the internet goes out

- Tailscale

- Private VPN: I can access my home network securely even when I'm away

- Even access my Gitea/Jellyfin instances!

- NFS/Samba storage

- I can keep files on my NAS and access them from my other machines

- TimeMachine backups

- It's a backup endpoint for our Mac

Does it work?

I had a drive show signs of failure (come on, $120 was too good of a deal for them to be 100%) but TrueNAS warned me and I bought a replacement. I wound up not replacing it for a couple weeks and things kept trucking along. The replacement was incredibly easy; I basically popped the drive in, added it to the pool as a replacement, and it was resilvered in a couple hours.

Having access to Gitea and other persistent services is also a huge plus. It's nice to know that even if an online service goes down tomorrow, I'll still have the infrastructure to keep my work preserved.

Networked eInk Screens

I really enjoy eInk panels for their clarity and unimposing display characteristics. The purpose of this project is to build toward an ecosystem of eInk hardware and supporting software that will let me share images with my family in a distributed, low-frequency network. eInk panels blend into the background like photos, and they can be slowly updated to give a taste of home from a few states away without being distracting.

This project leverages the Rust language to power the embedded firmware and some supporting data manipulation. Rust works really well in this case as we can leverage the type system to make static guarantees that we aren't accessing hardware resources in competing ways, among many other benefits. This level of expression and static guarantees makes writing embedded firmware almost as convenient as writing desktop software.

Hardware and Software

- The module

- Panel/board is from Waveshare

- Powered by a Raspberry Pi Pico W

- Firmware written in Rust

- Uses Embassy async framework

- Leverages embedded-hal to provide static, zero-cost abstractions

- Extracts packed 4 pixels per byte and writes to panel

- Supporting Software

- Simple image processing procedure in GIMP

- Resizes image to match panel resolution

- Quantizes to 4 color values

- Exports as 1 byte per pixel 0-3 binary format

- Rust utility to pack 4 pixels into a byte

- 2 bits per pixel for 4-level grayscale

- Simple Node.js UDP networking application

- Implements barebones protocol for sending binary packed pixel data

- Supports configurable MTU
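The packing and chunking steps above can be sketched as follows. This is not the project's actual utility or protocol: the bit order (first pixel in the most significant bits) and the 4-byte big-endian offset header are assumptions made for illustration, and `mtu` here means the maximum datagram payload size.

```rust
// Pack 1-byte-per-pixel values (0..=3) into 2 bits per pixel, 4 pixels per byte.
// Assumption: the first pixel of each group lands in the two most significant bits.
pub fn pack_2bpp(pixels: &[u8]) -> Vec<u8> {
    pixels
        .chunks(4)
        .map(|group| {
            let mut byte = 0u8;
            for (i, &p) in group.iter().enumerate() {
                byte |= (p & 0b11) << (6 - 2 * i);
            }
            byte
        })
        .collect()
}

// Reverse of `pack_2bpp`: expand each byte back into four 0..=3 pixel values.
pub fn unpack_2bpp(packed: &[u8]) -> Vec<u8> {
    packed
        .iter()
        .flat_map(|&b| (0..4).map(move |i| (b >> (6 - 2 * i)) & 0b11))
        .collect()
}

// Split packed data into datagram payloads no larger than `mtu` bytes.
// Hypothetical framing: each payload starts with a 4-byte big-endian offset
// so the receiver knows where the chunk belongs in the frame buffer.
pub fn to_packets(packed: &[u8], mtu: usize) -> Vec<Vec<u8>> {
    const HEADER_LEN: usize = 4;
    assert!(mtu > HEADER_LEN, "mtu must leave room for pixel data");
    let payload_len = mtu - HEADER_LEN;
    packed
        .chunks(payload_len)
        .enumerate()
        .map(|(n, chunk)| {
            let offset = (n * payload_len) as u32;
            let mut packet = offset.to_be_bytes().to_vec();
            packet.extend_from_slice(chunk);
            packet
        })
        .collect()
}
```

Carrying an offset in each datagram means a lost or reordered UDP packet only affects its own slice of the image, which suits a display that updates slowly anyway.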

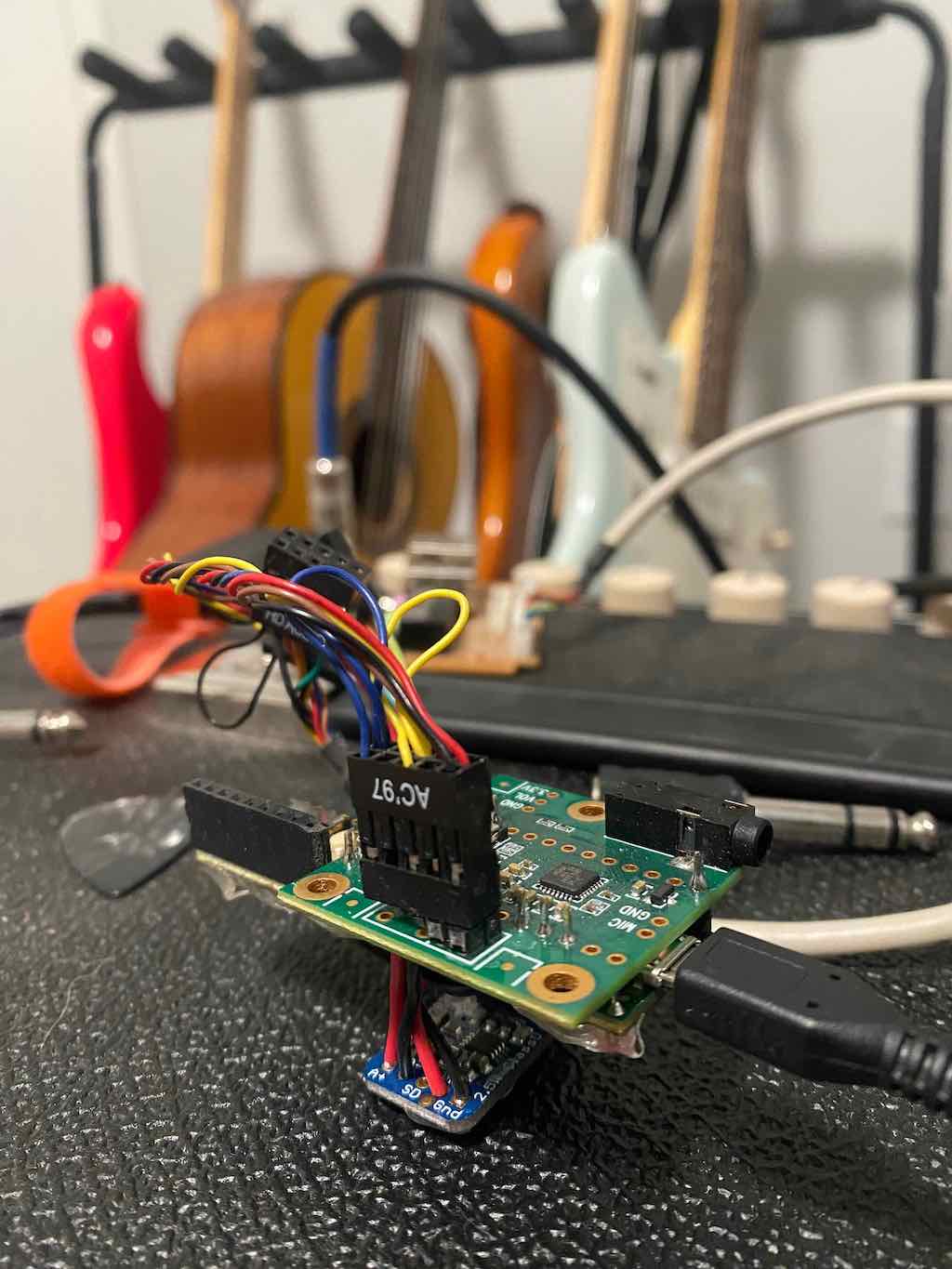

Guitar Pedal DSP

Bare testing hardware

I've played guitar since high school, and I've always been curious about electric guitar effects and signal processing. Fortunately, PJRC has an audio board along with supporting software that makes exploring this domain very simple. I've used the Teensy hardware (ARM Cortex M0, M4, M7) extensively, so this was a drop-in solution. The audio board provides a codec that gives CD-quality audio, which is more than adequate for my needs pumping crunchy guitar through an amp.

Complementing the board is an audio library that provides high-level access to the underlying hardware and data. The library exposes an audio object that presents the current audio buffer, which can be arbitrarily manipulated and sent back to the speakers; this is a convenient hook for exploring custom logic. I use this, in addition to implementing the audio library's interfaces for my own effects, to test and learn about guitar effects.
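To illustrate what "manipulating the current audio buffer" can look like, here is a minimal hard-clipping distortion over one block of signed 16-bit samples. It is a Rust sketch rather than the C++ firmware, it does not use the audio library's API, and `hard_clip` and its parameters are hypothetical.

```rust
// Hard-clip distortion: boost every sample, then clamp it to +/- `threshold`.
// `block` is one buffer of signed 16-bit samples, modified in place.
pub fn hard_clip(block: &mut [i16], gain: f32, threshold: i16) {
    // Treat a negative threshold as zero so the clamp range stays valid.
    let limit = threshold.max(0) as f32;
    for sample in block.iter_mut() {
        let boosted = *sample as f32 * gain;
        *sample = boosted.clamp(-limit, limit) as i16;
    }
}
```

Quiet samples pass through with the gain applied, while loud ones are flattened at the threshold, which is what produces the crunchy tone.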

I've implemented a simple command interface via the USB Serial connection that allows me to configure parameters without requiring hardware controls. I use this interface to build GUI applications that can be run on desktop or on my phone to control the pedal and conveniently toggle and configure effects.
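A line-based command interface of this kind might be parsed as shown below. The command grammar (`toggle <effect>` and `set <effect> <param> <value>`) is invented for illustration and is not the pedal's actual protocol; the sketch is in Rust rather than the firmware's C++.

```rust
// Hypothetical commands a GUI could send over the serial connection.
#[derive(Debug, PartialEq)]
pub enum Command {
    Toggle { effect: String },
    Set { effect: String, param: String, value: f32 },
}

// Parse one whitespace-separated command line, e.g. "set delay mix 0.5".
pub fn parse_command(line: &str) -> Result<Command, String> {
    let parts: Vec<&str> = line.split_whitespace().collect();
    match parts.as_slice() {
        ["toggle", effect] => Ok(Command::Toggle {
            effect: effect.to_string(),
        }),
        ["set", effect, param, value] => {
            let value = value
                .parse::<f32>()
                .map_err(|e| format!("bad value {:?}: {}", value, e))?;
            Ok(Command::Set {
                effect: effect.to_string(),
                param: param.to_string(),
                value,
            })
        }
        _ => Err(format!("unrecognized command: {:?}", line)),
    }
}
```

Keeping the protocol as plain text means the same commands can be typed by hand in a serial monitor or generated by a desktop or phone GUI.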

Hardware and Software

- Teensy 3.6

- Any Teensy with floating point support will work (3.x and 4.x)

- Audio Adapter

- 2.5W Amplifier

- Allows powering small speakers if not outputting to amp or headphones

- Cabling

- You'll want to adapt from 1/8" to 1/4" jacks to plug into guitars and amps

- Firmware in C++

Why mdBook?

This site is generated using a tool called mdBook, which is a static site generator built by the people behind the Rust language. I give it Markdown files, and it gives you what you're seeing now. Why use this when I could spin up a fancy website? After all, I have written a lot of web code. It's quite simple.

Content > Sparkles

I want to share the important things, the stuff that I've worked on. If I put together a portfolio site from scratch, with the intent of making it shiny and super interactive, I would never get it done. I know, because I've tried it before. There are too many ways to make it fun and dynamic and...

So I am using a tool that allows me to focus on creating substance over what I would consider, in my own case, fluff. Because I want you to see me and my story, not a shiny toy I made for you.